Posts Tagged standards

Mapping SAMM to ISO/IEC 27034

Posted by Colin Watson in Discussion on April 7th, 2012

ISO/IEC 27034 (Application Security), which can be purchased from the International Organization for Standardization (ISO) and national standards bodies, is designed to help organisations build security throughout the life cycle of applications.

There is a preview of the contents and first few pages of Part 1 on the IEC website. Part 1 presents an overview of application security and introduces definitions, concepts, principles and processes involved in application security.

The contents listing for Annex A of ISO/IEC 27034:2011 Part 1 mentions a mapping to the Microsoft Security Development Lifecycle (SDL), and in the section describing the standard’s purpose, it refers to the need to map existing software development processes to ISO/IEC 27034:

Annex A (informative) provides an example illustrating how an existing software development process can be mapped to some of the components and processes of ISO/IEC 27034. Generally speaking, an organization using any development life cycle should perform a mapping such as the one described in Annex A, and add whatever missing components or processes are needed for compliance with ISO/IEC 27034.

The contents listing for Part 1 shows that the SDL is compared with an Organization Normative Framework (ONF) made up of ideal application security related processes and resources:

- Business context

- Regulatory context

- Application specifications repository

- Technological context

- Roles, responsibilities and qualifications

- Organisation application security control (ASC) library

- Application security life cycle reference model

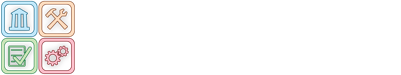

This is very useful, but I wondered how a comparison with Open SAMM might look. I have therefore created the table below indicating how the processes and resources mapped to SDL relate to the 12 security practices defined in Open SAMM. A large diamond symbol indicates where an Open SAMM practice has a very close relationship with a topic within ISO/IEC 27034, and a smaller diamond indicates a weaker relationship.

The ISO/IEC 27034 “life cycle reference model” appears to be most closely aligned with the idea of an organisation-specific “software assurance programme” in SAMM combined with a risk-based approach to applying security to different applications, and within sub-parts of application systems.

We can also see that the SAMM construction, verification and deployment practices primarily relate to the ISO/IEC 27034 application security control library, which is used for the overall organisation and for individual applications, as well as to the actual use of the framework while provisioning and operating the application (acquisition/development, deployment and operation).
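The diamond notation described above amounts to a sparse matrix of (SAMM practice, ISO/IEC 27034 topic) pairs, each with a strength. The following is a minimal Python sketch of that idea, not a reproduction of the table. The practice names are those of Open SAMM 1.0 and the topic names come from the ONF list earlier in this post. The names `Strength`, `build_example_mapping` and `practices_for` are hypothetical. The only cells filled in are the ones the prose states (construction, verification and deployment practices relating to the ASC library), and marking them all as strong is an assumption made purely for illustration.

```python
from enum import Enum


class Strength(Enum):
    STRONG = "large diamond"  # very close relationship
    WEAK = "small diamond"    # weaker relationship


# The 12 Open SAMM 1.0 security practices, grouped by business function.
SAMM_PRACTICES = {
    "Governance": ["Strategy & Metrics", "Policy & Compliance", "Education & Guidance"],
    "Construction": ["Threat Assessment", "Security Requirements", "Secure Architecture"],
    "Verification": ["Design Review", "Code Review", "Security Testing"],
    "Deployment": ["Vulnerability Management", "Environment Hardening", "Operational Enablement"],
}

# ONF topics as listed in the post.
ONF_TOPICS = [
    "Business context",
    "Regulatory context",
    "Application specifications repository",
    "Technological context",
    "Roles, responsibilities and qualifications",
    "Organisation application security control (ASC) library",
    "Application security life cycle reference model",
]

ASC_LIBRARY = ONF_TOPICS[5]


def build_example_mapping():
    """Return {(practice, topic): Strength} for the cells named in the prose.

    ASSUMPTION: every cell is marked STRONG as a placeholder; the real
    per-cell strengths are in the original table, not reproduced here.
    """
    mapping = {}
    for function in ("Construction", "Verification", "Deployment"):
        for practice in SAMM_PRACTICES[function]:
            mapping[(practice, ASC_LIBRARY)] = Strength.STRONG
    return mapping


def practices_for(topic, mapping, strength=None):
    """List SAMM practices mapped to a topic, optionally filtered by strength."""
    return [
        practice
        for (practice, mapped_topic), s in mapping.items()
        if mapped_topic == topic and (strength is None or s is strength)
    ]


if __name__ == "__main__":
    mapping = build_example_mapping()
    for practice in practices_for(ASC_LIBRARY, mapping):
        print(practice)
```

Keeping the mapping sparse (only related pairs are stored) mirrors the table, where an empty cell simply means no relationship was identified.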

SAMM is available to download free of charge, and can also be purchased at-cost as a colour soft cover book.

Mapping SAMM to Security Automation

Posted by Colin Watson in Discussion on March 25th, 2012

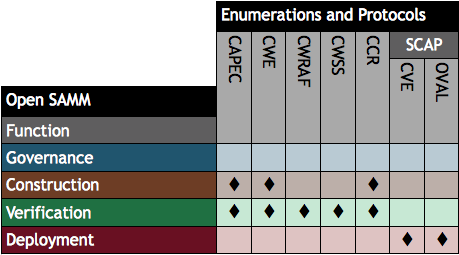

The presentation by Richard Struse (US Department of Homeland Security) and Steve Christey (Mitre) of Risk Analysis and Measurement with CWRAF (PDF) at the IT Security Automation Conference in October 2011 illustrates how software security automation enumerations and protocols map to SAMM’s construction, verification and deployment security practices. The specifications highlighted in the presentation’s final slide are:

- Common Attack Pattern Enumeration and Classification (CAPEC)

- Common Weakness Enumeration (CWE)

- Common Weakness Risk Analysis Framework (CWRAF)

- Common Weakness Scoring System (CWSS)

- CWE Coverage Claims Representation (CCR)

- Security Content Automation Protocol (SCAP)

I have summarised the slide in the table below.

For further security registries, description languages and standardised processes see the Making Security Measurable website. Risk Analysis and Measurement with CWRAF is being presented at AppSec DC 2012 in April.

Jeremy Epstein on the Value of a Maturity Model

Posted by admin in Discussion on June 18th, 2009

Security maturity models are the newest thing, and also a very old idea with a new name. If you look back 25 years to the dreaded Orange Book (also known as the Trusted Computer System Evaluation Criteria or TCSEC), it included two types of requirements – functional (i.e., features) and assurance. The Orange Book specified assurance through techniques like design documentation, use of configuration management, formal modeling, trusted distribution, independent testing, etc. Each of the requirements stepped up as the system moved from the lowest level of assurance (D) to the highest (A1). Or in other words, to get a more secure system, you need a more mature security development process.

As an example, independent testing was a key part of the requirement set – for class C products (C1 and C2) vendors were explicitly required to provide independent testing by “at least two individuals with bachelor degrees in Computer Science or the equivalent. Team members shall be able to follow test plans prepared by the system developer and suggest additions, shall be familiar with the ‘flaw hypothesis’ or equivalent security testing methodology, and shall have assembly level programming experience. Before testing begins, the team members shall have functional knowledge of, and shall have completed the system developer’s internals course for, the system being evaluated.” [TCSEC section 10.1.1] Further, “The team shall have ‘hands-on’ involvement in an independent run of the tests used by the system developer. The team shall independently design and implement at least five system-specific tests in an attempt to circumvent the security mechanisms of the system. The elapsed time devoted to testing shall be at least one month and need not exceed three months. There shall be no fewer than twenty hands-on hours spent carrying out system developer-defined tests and test team-defined tests.” [TCSEC section 10.1.2] The requirements increase as the level of assurance goes up; class A systems require testing by “at least one individual with a bachelor’s degree in Computer Science or the equivalent and at least two individuals with masters’ degrees in Computer Science or equivalent” [TCSEC section 10.3.1] and the effort invested “shall be at least three months and need not exceed six months. There shall be no fewer than fifty hands-on hours per team member spent carrying out system developer-defined tests and test team-defined tests.”

In the 25 years since the TCSEC, there have been dozens of efforts to define maturity models to emphasize security. Most (probably all!) of them are based on wishful thinking: if only we’d invest more in various processes, we’d get more secure systems. Unfortunately, with very minor exceptions, the recommendations for how to build more secure software are based on “gut feel” and not on any metrics.

In early 2008, I was working for a medium sized software vendor. To try to convince my management that they should invest in software security, I contacted friends and friends-of-friends in a dozen software companies, and asked them what techniques and processes their organizations use to improve the security of their products, and what motivated their organizations to make investments in security. The results of that survey showed that there’s tremendous variation from one organization to another, and that some of the lowest-tech solutions like developer training are believed to be most effective. I say “believed to be” because even now, no one has metrics to measure effectiveness. I didn’t call my results a maturity model, but that’s what I found – organizations with radically different maturity models, frequently driven by a single individual who “sees the light”. [A brief summary of the survey was published as “What Measures do Vendors Use for Software Assurance?” at the Making the Business Case for Software Assurance Workshop, Carnegie Mellon University Software Engineering Institute, September 2008. A more complete version is in preparation.]

So how do security maturity models like OpenSAMM and BSIMM fit into this picture? Both have done a great job cataloging, updating, and organizing many of the “rules of thumb” that have been used over the past few decades for investing in software assurance. By defining a common language to describe the techniques we use, these models will enable us to compare one organization to another, and will help organizations understand areas where they may be more or less advanced than their peers. However, they still won’t tell us which techniques are the most cost effective methods to gain assurance.

Which raises the question – which is the better model? My answer is simple: it doesn’t really matter. Both are good structures for comparing an organization to a benchmark. Neither has metrics to show which techniques are cost effective and which are just things that we hope will have a positive impact. We’re not yet at the point of VHS vs. Betamax or Blu-ray vs. HD DVD, and we may never get there. Since these are process standards, not technical standards, moving in the direction of either BSIMM or OpenSAMM will help an organization advance – and waiting for the dust to settle just means it will take longer to catch up with other organizations.

Or in short: don’t let the perfect be the enemy of the good. For software assurance, it’s time to get moving now.

About the Author

Jeremy Epstein is Senior Computer Scientist at SRI International, where he’s involved in various types of computer security research. Over 20+ years in the security business, Jeremy has done research in multilevel systems and voting equipment, led security product development teams, been involved in far too many government certifications, and tried his hand at consulting. He’s published dozens of articles in industry magazines and at research conferences. Jeremy earned a B.S. from New Mexico Tech and an M.S. from Purdue University.